You’re sitting at your desk. The pressure is on. You’re trying to get a ridiculous amount of work done before close of business. Your coworker feels your pain and asks, “Want another coffee?” Even though you’re now four large long blacks in, you reply, “Heck yes!” It’s crazy. There’s just no way you’re going to get it all done in time. URGHHH.

If only you could clone yourself.

Maybe.

Just maybe.

You might be able to get it all done.

You remember seeing something on your feed about AI and neural networks. *MAJOR LIGHTBULB MOMENT* I just need one of those and I’ll be on the home straight.

Okay. Stop right there. Neural networks are awesome and can do a whole heap of things, but let’s take a step back for a moment. They’re not the be-all and end-all!

So what are they then?

What is a Neural Network?

Neural networks try to mimic how your brain works. Huh?! Yep, you heard me right. Neural networks, or more specifically artificial neural networks (i.e. not your brain), take an input, apply a whole bunch of processes (computation) and produce an output.

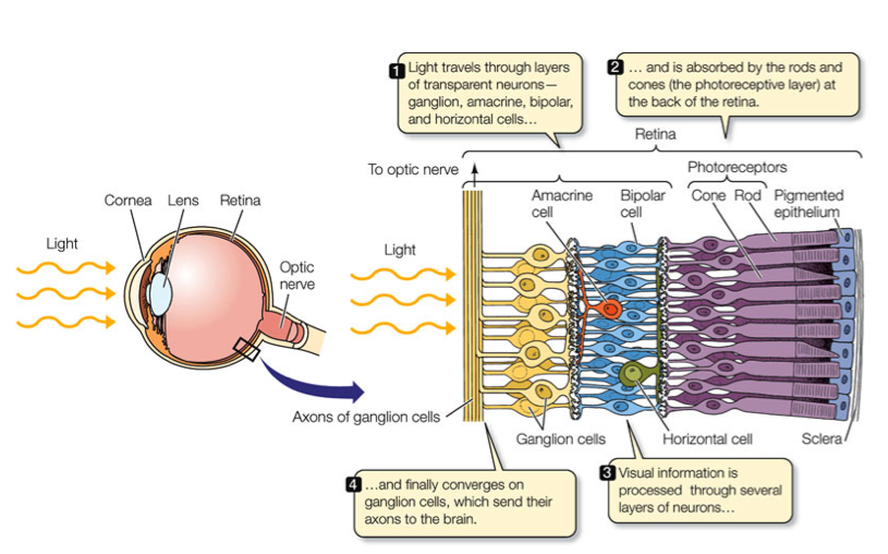

Your brain is the most advanced neural network on this planet. It is literally state of the art. That’s why thousands of researchers around the world are trying to replicate its abilities. You probably also underestimate how many calculations your brain completes each and every day. Take the ability to see. What’s actually happening here?

Light enters your eyes and hits photoreceptor cells (rods and cones) in the retina, which convert it into electrical signals. Those signals pass through further layers of neurons in the retina and are then carried to the brain along the optic nerve. So basically, your eyes receive an input (light), there’s a lot of behind-the-scenes processing, and your brain receives the output (electrical signals), which allows it to see.

Hyper-simplified, but you get the idea. Artificial neural networks work the same way. They take some form of input, apply processing and produce an output.

How do Neural Networks work?

Neural networks have three key parts:

- Layers

- Neurons

- Synapses (aka weights)

Layers

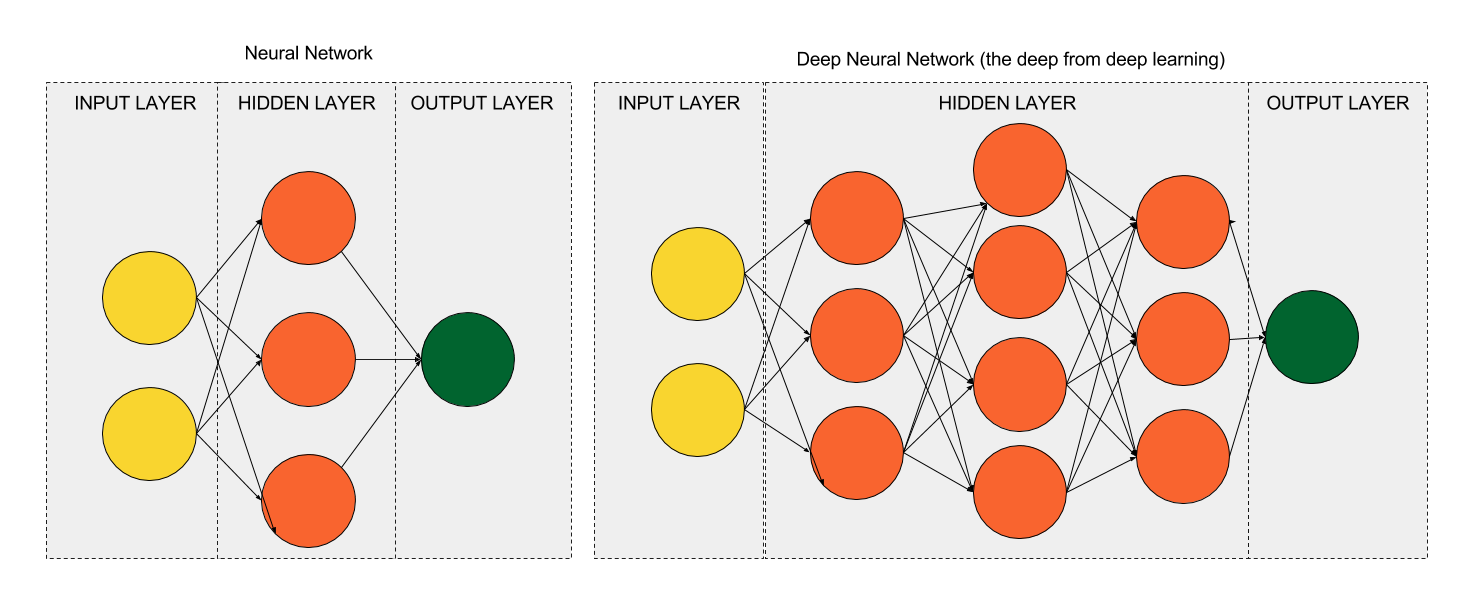

Layers are broken up into three groups: an input layer, a hidden layer, and an output layer.

The input layer is where you pass data or values into the neural network. In the eye example above, this is your eyes receiving light.

The hidden layer is where processing occurs. This is where a neural network takes the inputs and converts them into a series of values, which get passed either to another hidden layer or to the output layer. A neural network with more than one hidden layer is known as a deep neural network, hence the term “deep learning”.
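To make the input, hidden and output flow concrete, here’s a minimal Python sketch of a forward pass. It assumes each neuron takes a weighted sum of its inputs plus a bias and squashes the result with a sigmoid function. The weights, biases and helper names (`layer_forward`, `network_forward`, `is_deep`) are made up for illustration, not taken from any library.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of every input, adds its bias,
    then applies the sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


def network_forward(inputs, layers):
    """Feed values from the input layer through each (weights, biases) layer in turn.
    Whatever comes out of the last layer is the output layer's result."""
    values = inputs
    for weights, biases in layers:
        values = layer_forward(values, weights, biases)
    return values


def is_deep(layers):
    """Every layer except the last is hidden; more than one hidden layer means 'deep'."""
    return len(layers) - 1 > 1


# Hypothetical network: 2 inputs -> hidden layer of 3 neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                 # output layer
]

print(network_forward([1.0, 0.5], layers))  # a single value between 0 and 1
print(is_deep(layers))                       # False: only one hidden layer
```

Adding a second `(weights, biases)` pair before the output layer would give two hidden layers, and `is_deep` would flip to `True`.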

Finally, the output layer. This is where the results of your neural network are presented and collected, e.g. your brain interpreting vision and ‘seeing’.

These diagrams are super simplified but get the idea across. The YELLOW cells are part of the input layer, the ORANGE cells are part of the hidden layer and the GREEN cells are part of the output layer.

Neurons and weights

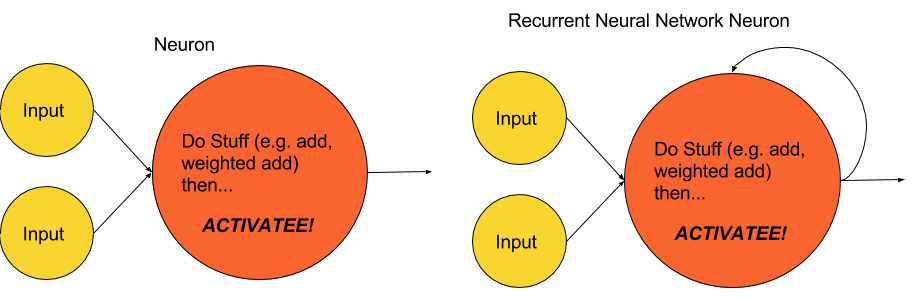

Neurons are nodes which receive an input, process that information and output a result.

Easy, right?

For example, neurons in your eyes take light, process the light waves and output an electrical signal to your brain.

Better yet, think of a juicer as a neuron. You pass it an apple, it applies a process (juicing the apple) and outputs juice. The juice can then be passed into a fermenter to produce delicious cider (another neuron) or had as a drink (part of the output layer).

Ignore the neuron on the right for now.

Neurons in a neural network work pretty much the same way described above. They are passed inputs, things are done with the inputs and an output is sent out.

Up until now, we’ve sort of ignored the lines connecting each of the neurons. These are super important because later on they allow us to tune/train a neural network so it predicts well.

These lines are known as weights or synapses.

Before an input is passed to a neuron, it is multiplied by a weight (often a small number, e.g. between -1 and 1). The weight controls how much that input influences the neuron, which helps determine whether that particular neuron is switched on or not. For example, if an input is multiplied by a weight of 0, it’s effectively shut off.
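Here’s a minimal sketch of a single neuron, assuming a simple “step” rule where the neuron switches on only if its weighted total is positive (real networks usually use smoother activation functions such as sigmoid or ReLU). The function name `neuron` and all the numbers are hypothetical. The third input has a weight of 0, so changing it makes no difference at all.

```python
def neuron(inputs, weights, bias=0.0):
    """Multiply each input by its weight, add them up with a bias,
    and report whether the neuron is switched on (total above zero)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    switched_on = total > 0  # step activation
    return total, switched_on


weights = [0.8, -0.5, 0.0]  # the third input is effectively shut off
print(neuron([0.9, 0.3, 0.7], weights))
print(neuron([0.9, 0.3, 100.0], weights))  # same result: weight 0 ignores the input
```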

When a neural network is first made, these weights are usually random numbers. They are then optimised when the model is trained. The network compares its prediction with the actual value, and a process called backpropagation works out how much each weight contributed to the difference (the error). A method called gradient descent then nudges each weight in the direction that reduces the error, with a parameter called the learning rate controlling how big each nudge is. Repeating this many times minimises the difference between the neural network’s predictions and the actual values. This YouTube video explains this pretty well.
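The training loop can be sketched on the smallest possible “network”: one neuron with no activation function that predicts `w * x + b`. For a model this small, backpropagation boils down to working out the derivative of the error with respect to each weight by hand; in a real multi-layer network the chain rule carries those derivatives backwards through every layer. The data, starting values, `learning_rate` and number of `epochs` are all assumptions chosen for illustration.

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit prediction = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # real networks usually start from small random values
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions with the current weight and bias.
        preds = [w * x + b for x in xs]
        # Backward pass: derivative of the mean squared error w.r.t. w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Gradient descent: step against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # made-up data that follows y = 2x + 1
w, b = train(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")  # should land very close to w = 2, b = 1
```

Try setting `learning_rate` to something large like 0.5: on this data the updates overshoot and the weights blow up instead of settling, which is why the learning rate needs tuning.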

Types of Neural Networks & Applications

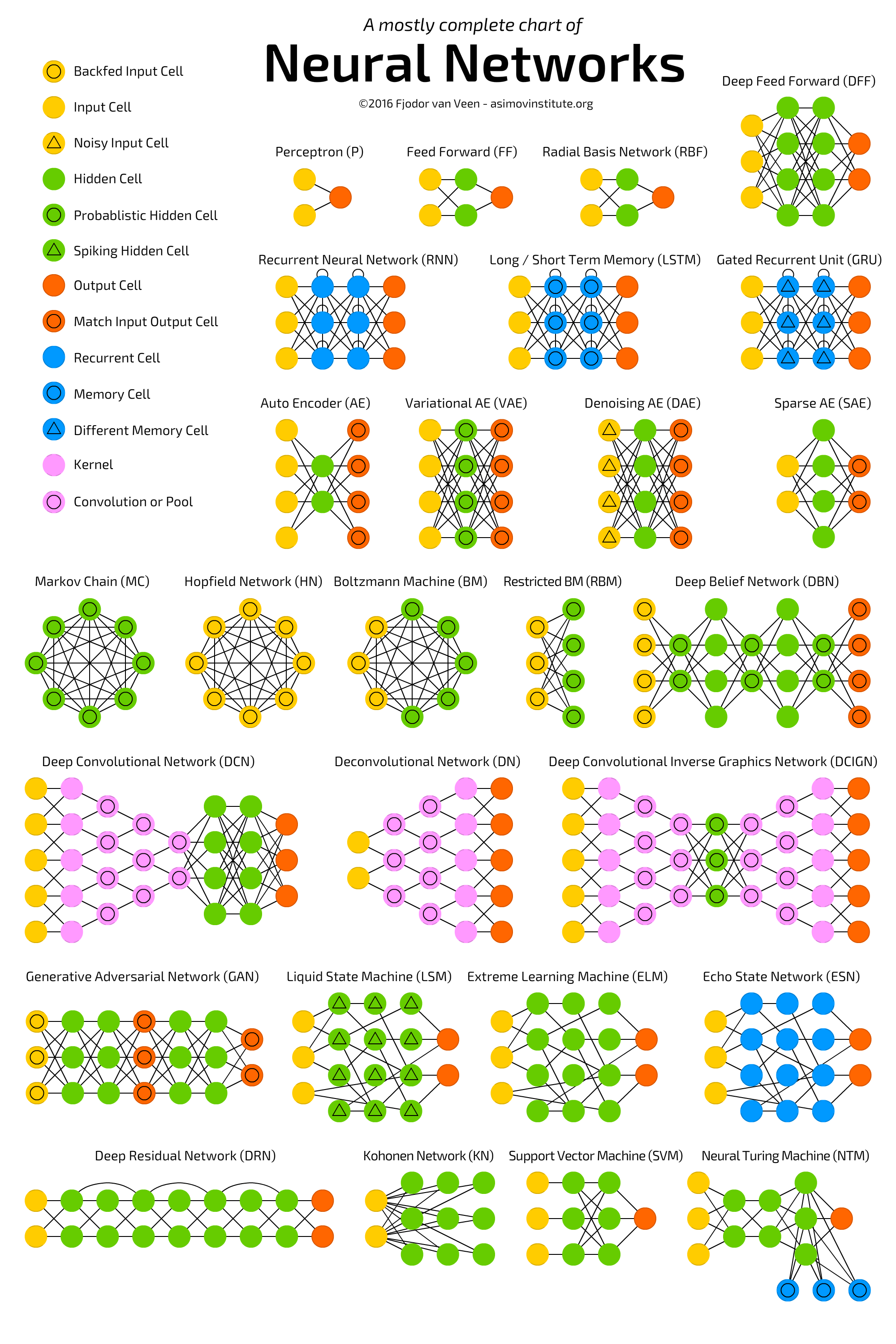

Image Cred: The Asimov Institute

Neural networks aren’t new. In fact, the first paper proposing the idea was written in 1943 by Warren McCulloch and Walter Pitts. So they’ve had time to settle and evolve. One clear example of this is the diagram above from The Asimov Institute showcasing the many awesome types.

But… the three main types you need to know about (at least for now) are…

Feedforward Neural Networks

This is probably as basic as it gets. It’s a neural network with three or more layers that feeds information forward, meaning information never flows back into previous layers or neurons. An example of this is a multilayer perceptron (MLP). Don’t let the name fool you: it’s just a technical way of describing a feedforward network of fully connected layers, which is often used for classifying things. Check out this example on GitHub.
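As a taste of what a hidden layer buys you, here’s a tiny multilayer perceptron that classifies the XOR (exclusive or) pattern, something a single neuron can’t do because no single straight line separates the 1s from the 0s. This is a sketch with hand-picked weights and a step activation rather than a trained network; `step` and `xor_mlp` are hypothetical names. Information only flows forward: inputs, then the hidden layer, then the output.

```python
def step(x):
    """Step activation: the neuron switches on (1) only if its total is positive."""
    return 1 if x > 0 else 0


def xor_mlp(x1, x2):
    # Hidden layer: two neurons with hand-picked (not trained) weights and biases.
    h_or = step(1.0 * x1 + 1.0 * x2 - 0.5)   # on if at least one input is 1
    h_and = step(1.0 * x1 + 1.0 * x2 - 1.5)  # on only if both inputs are 1
    # Output layer: on when "OR" is on but "AND" is off, i.e. exclusive or.
    return step(1.0 * h_or - 1.0 * h_and - 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_mlp(a, b))
# 0 0 -> 0
# 0 1 -> 1
# 1 0 -> 1
# 1 1 -> 0
```

In a real MLP the weights would be learned by backpropagation, and a smooth activation would replace `step` so that gradients exist.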

Convolutional Neural Networks

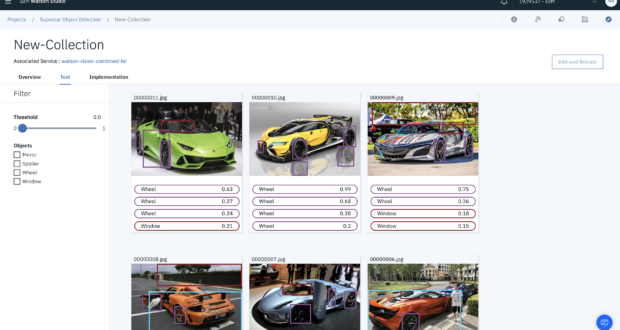

Convolutional neural networks are widely used in image recognition. They perform extremely well because they preserve spatial information: how each input (e.g. a pixel) relates to the inputs around it. In other words, they work because they analyse information based on groupings or closeness.

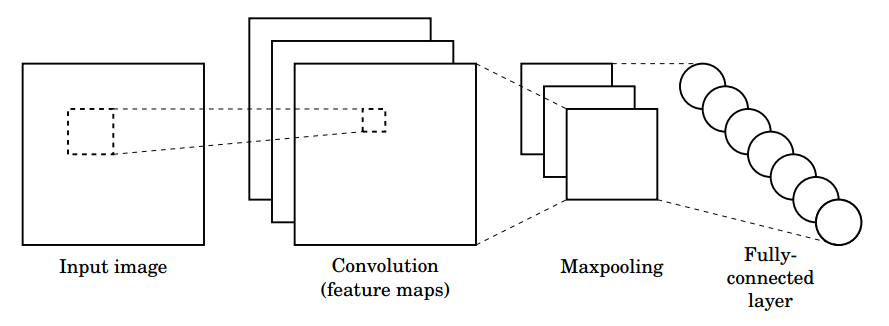

Image Cred: vaetas

They achieve this with special layers inside the hidden layers called convolutional and pooling layers. Convolutional layers help the neural network find small segments or features of an image, such as edges or simple shapes. Pooling layers then summarise each region of those feature maps (for example, by keeping only the largest value), which shrinks the data and makes the network less sensitive to exactly where in the image a feature appears.
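Here’s a rough sketch of both layer types in plain Python, assuming a single-channel image, no padding, a stride of 1 for the convolution and 2×2 max pooling. The 6×6 “image” and the vertical-line kernel are made up for illustration; in a real CNN the kernel values are learned during training rather than hand-written.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and record how strongly
    each patch matches it. Like most deep learning libraries, this is technically
    cross-correlation: the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Keep only the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Toy 6x6 image with a vertical line in column 2 (values are made up).
image = [[0, 0, 1, 0, 0, 0] for _ in range(6)]

# Hypothetical 3x3 kernel that responds to vertical lines.
kernel = [
    [-1, 2, -1],
    [-1, 2, -1],
    [-1, 2, -1],
]

features = convolve2d(image, kernel)  # 4x4 feature map
pooled = max_pool(features)           # 2x2 summary
print(features)
print(pooled)
```

Each row of the feature map comes out as `[-3, 6, -3, 0]`: the kernel responds most strongly where it’s centred on the line. After pooling, the 2×2 result `[[6, 0], [6, 0]]` still says “there’s a vertical line on the left”, with a quarter as many values.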

Say you’re training a neural network to recognise images of a bus. An early convolutional layer might learn to recognise wheels, hinting that the image shows some mode of transport, while deeper layers, with pooling layers in between, combine those features to recognise the overall frame of the bus. In other words, early convolutional layers analyse lower-level segments of an image, such as the wheel of a bus.

This GitHub tutorial will help you get a better understanding and put it into practice.

Recurrent Neural Networks

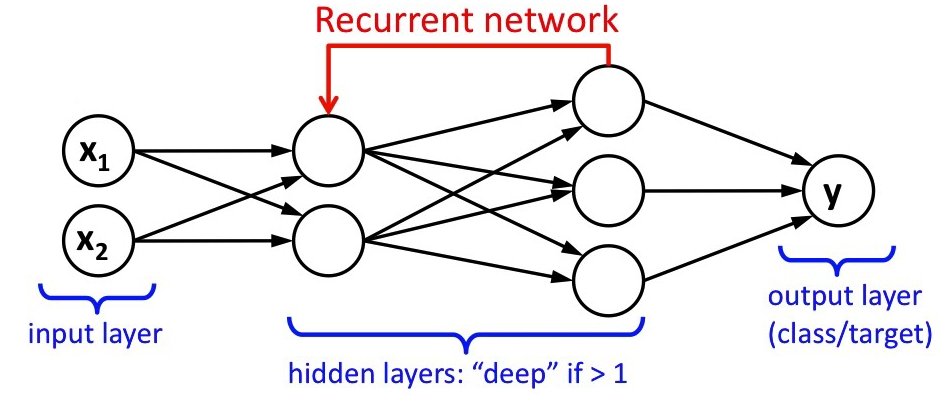

Image Cred: leonardoaraujosantos

Up until now, the types of neural networks described have been feedforward networks, i.e. the inputs flow through the hidden layers all the way to the outputs. A recurrent neural network is slightly different: its synapses don’t just go in one direction, they can also loop information back into the network, as shown above. This acts as a memory, allowing the neural network to ‘remember’ inputs that came before the current input. This is especially useful for natural language generation and language translation.

This tutorial goes through how to use a variant of recurrent neural networks called Long Short-Term Memory (LSTM) models to tackle the Stanford Natural Language Inference Corpus. Try it out yourself! Make sure you set up your deep learning environment before you give it a go.
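The “memory” idea can be sketched with the simplest possible recurrent neuron: a single hidden value `h` that is fed back in at every step alongside the next input. This is a plain RNN, not an LSTM (LSTMs add gates that control what gets remembered and forgotten), and the weights and the function name `rnn` are hypothetical.

```python
import math


def rnn(sequence, w_input=0.5, w_hidden=0.9, bias=0.0):
    """Run a one-unit recurrent neuron over a sequence and return every hidden state.

    The new hidden state depends on the current input AND the previous hidden state,
    which is how the network carries a 'memory' of earlier inputs forward.
    """
    h = 0.0  # nothing remembered yet
    states = []
    for x in sequence:
        h = math.tanh(w_input * x + w_hidden * h + bias)
        states.append(h)
    return states


print(rnn([1.0, 0.0, 0.0])[-1])  # non-zero: the early 1 still echoes through
print(rnn([0.0, 0.0, 0.0])[-1])  # 0.0: nothing to remember
```

Both sequences end with the same input (0), but the final hidden states differ: the first sequence still “remembers” the 1 it saw two steps earlier.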

There you have it… the super simple guide to neural networks. Don’t just read this! Give it a go and start coding and clicking buttons; it’ll help it sink in a whole lot faster!